Interacting via the terminal

Bourne Again SHell (BASH)

The standard way to interact with Unix is by entering commands in

a shell. A shell is a command interpreter that runs inside a terminal window and accepts text entry at the

prompt (in these notes, $). There are many shells available, each of

which has its own syntax and unique features. The historical shells are

the C shell (csh) and the Bourne shell (sh). The default shell

on many BSD Unix systems is the KornShell (ksh). On Linux and (until

recently) macOS, the default is bash, the Bourne Again SHell. For

licensing reasons, macOS has moved to the Z shell (zsh) as of 10.15

Catalina. Many scripting activities that were once written in shell

scripting languages are now often coded in Python, Ruby, or Lua. Still,

shell scripting is ubiquitous, and it’s useful to have some familiarity

with it.


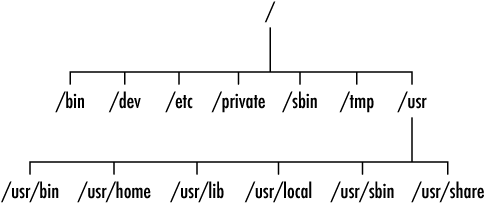

Unix is organized around a file system whose root-level directory is

denoted by a single slash (/). Further levels of subdirectories

(what Windows and Macintosh users call folders) are nested. On most

flavours of Unix, your personal home directory lives at either

/usr/home/$USER or /home/$USER; on macOS, home is

/Users/$USER. In this notation, $USER is a variable holding your

Unix shortname. To save you keystrokes, the home directory is

abbreviated by a tilde (~). The current directory (.) and its

immediate parent directory (..) also have convenient shortcuts.

Partial tree diagram of a typical Unix directory structure

The shell maintains a sense of where you are in the file system. Your

current location, the current working directory, can be queried using

the pwd command. The contents of this directory—typically data

files, program files (executables), or other subdirectories—can be

inspected by typing ls. The cd command changes the current

working directory to a new location that the user provides as an

argument. Commands that produce output write their results to the next

free line of the terminal.

$ pwd # report the current working directory

/usr/home/ksdb

$ cd / # change the current working directory to /

$ pwd

/

$ ls # list the contents of the current working directory

bin sbin

dev tmp

etc usr

private

$ cd ~ # go to the user's home directory

$ pwd

/usr/home/ksdb

$ cd .. # go to the current directory's immediate parent

$ pwd

/usr/home

$ cd /usr/home/ksdb

In the example above, text that follows a dollar sign ($) is

understood to be user input, typed at the keyboard. The explanatory text

has no functional purpose; bash ignores any characters to the right

of a hash (#).

Try this on your own

Draw a directory tree that shows the path leading from the root directory to your home.

Everything in your home directory is open to modification, including the

directory structure. There are commands to create (mkdir) and delete

(rmdir) directories.

$ mkdir test # create directory /usr/home/ksdb/test

$ ls

test

$ cd test

$ ls # there are no contents yet

$ cd ..

$ rmdir test # delete the empty directory /usr/home/ksdb/test
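The commands met so far (cd, pwd, mkdir, and rmdir) can be tried out safely in a throwaway directory. The following is only a sketch: the directory names projects and notes and the variable names are invented for illustration, and mktemp -d (not covered in these notes) is assumed to be available for creating the scratch directory.

```shell
# Scratch area so that no real files are touched.
# (The names scratch, projects and notes are illustrative only.)
scratch=$(mktemp -d)
cd "$scratch"

mkdir projects
mkdir projects/notes      # a directory nested inside projects
cd projects/notes         # descend two levels at once
deep=$(pwd)

cd ..                     # back up one level, into projects
up_one=$(pwd)

# rmdir only removes empty directories, so work from the inside out.
cd "$scratch"
rmdir projects/notes
rmdir projects
cd /
rmdir "$scratch"

echo "deepest directory: $deep"
echo "after cd ..:       $up_one"
```

Removing projects before projects/notes would fail, because rmdir refuses to delete a directory that still has contents.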

Paths

A path is a chain of slash-separated, nested directory names that is used to specify a location in the file system. A path can be either absolute or relative, meaning that it is expressed in reference to either the root or the current working directory.

$ pwd

/usr/home/ksdb # hierarchy of directories separated by slashes

$ mkdir /usr/home/ksdb/fruit # absolute path

$ mkdir vegetables # relative path

$ cd fruit

$ touch apples # create an empty file /usr/home/ksdb/fruit/apples

$ touch ./oranges # create an empty file /usr/home/ksdb/fruit/oranges

$ ls

apples oranges

$ touch ../vegetables/broccoli

$ ls /usr/home/ksdb/vegetables

broccoli

$ ls ../.. # inspect the directory two levels up

bin sbin

dev tmp

etc usr

private

A file name argument that is not preceded by a complete path (such as

apples in the example above) is assumed to be in the current working

directory. On the other hand, a command without a path is assumed to be

somewhere in the default search path. The default search path is

specified as a colon-separated list of directories assigned to the

shell variable PATH. When a particular command is issued, each

successive directory in PATH is explored in an attempt to find the

correct executable. The shell’s default path can be modified by the

user.

To run programs of your own, you will have to include a path to

the program file (for example, ./my-program), unless its directory is listed in PATH. The standard Unix commands are exempted from this

requirement. They correspond to a computer program of the same name and

live in one of the bin or sbin directories scattered about the

file system. These directories are included in the default search path.

$ whereis pwd

/bin/pwd

$ whereis mkdir

/bin/mkdir

$ whereis cd

/usr/bin/cd

$ echo $PATH

/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/bin:/usr/X11/bin:/usr/X11R6/bin

$ echo $PATH | rs -c: . 2

/usr/bin /bin

/usr/sbin /sbin

/usr/local/bin /usr/X11/bin

/usr/X11R6/bin

$ mkdir ~/bin

$ export PATH=$PATH:/usr/home/ksdb/bin

$ echo $PATH | rs -c: . 3

/usr/bin /bin /usr/sbin

/sbin /usr/local/bin /usr/X11/bin

/usr/X11R6/bin /usr/home/ksdb/bin

The symbols | rs -c: . 2 following the second call to echo $PATH

cause the colon-separated path list to be reformatted into two columns.

Recall that ~ is a way of accessing the user’s home directory. So,

the example has created a new bin directory within the home

directory /usr/home/ksdb. The export command ensures that

the new value of the path variable is passed on to every program started from the current shell. (Shells already running

in other terminals are not affected.) PATH is assigned its old

value, accessed with $PATH, with the new directory

/usr/home/ksdb/bin tacked onto the end of the list. Changes to the

path can be made persistent across terminal sessions by adding export

PATH=$PATH:<new search directory> to the .bash_profile file in

your home directory.
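The effect of extending the search path can be checked directly. The sketch below is illustrative rather than definitive: the program name hello-demo and the temporary directory are invented, and it relies on command -v (a shell builtin not covered in these notes) to report where a command would be found.

```shell
# Build a private bin directory containing one tiny program.
# (The name hello-demo and the use of mktemp are illustrative choices.)
bindir=$(mktemp -d)
printf '#!/bin/sh\necho hello from my own program\n' > "$bindir/hello-demo"
chmod +x "$bindir/hello-demo"

# Before: the shell cannot find the program by name alone.
if command -v hello-demo > /dev/null
then
    found_before=yes
else
    found_before=no
fi

# Append the directory to the search path, keeping the old value.
export PATH="$PATH:$bindir"

# After: the bare name resolves to the file in the new directory.
found_at=$(command -v hello-demo)
greeting=$(hello-demo)

echo "found before the change: $found_before"
echo "$found_at says: $greeting"
```

Because the new directory is appended rather than prepended, a program of the same name in an earlier PATH directory would still take precedence.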

Commands

Command invocations have one of the following forms: command, command

option(s), command filename(s), or command option(s) filename(s).

The ls command, for example, accommodates all of them; e.g., ls,

ls -a -l -F, ls myfile.txt, and ls -s myfile.txt.

$ cd ~

$ ls -a

. .bash_profile

.. .xinitrc

.bash_history test

$ ls -aF

./ .bash_profile

../ .xinitrc

.bash_history test/

$ ls -lF /

drwxr-xr-x@ 40 root wheel 1360 15 Dec 13:59 bin/

dr-xr-xr-x 2 root wheel 512 2 Jan 08:53 dev/

lrwxr-xr-x@ 1 root admin 11 22 Jan 2008 etc@ -> private/etc

drwxr-xr-x@ 66 root wheel 2244 15 Dec 13:59 sbin/

lrwxr-xr-x@ 1 root admin 11 22 Jan 2008 tmp@ -> private/tmp

drwxr-xr-x@ 13 root wheel 442 29 Feb 2008 usr/

lrwxr-xr-x@ 1 root admin 11 22 Jan 2008 var@ -> private/var

Options typically begin with a hyphen. In the example above, -a

instructs the ls command to list all files, even the so-called dot

files, which are usually invisible. The option pairs -aF and

-lF are shortcuts for -a -F and -l -F. The -F option

activates additional formatting in the form of a trailing symbol that

identifies the file type: / marks a directory, * an executable

(program), and @ a symbolic link (pointer to another file). The

-l option gives a verbose listing of all the major file attributes.

This information is arranged in seven columns, the first of which

indicates the file type (character 1) along with the owner (characters

2–4), group (characters 5–7), and other (characters 8–10) access

permissions using single-letter codes.

-----1----- 2- --3--- ---4-- --5-- -----6------ ----7---

drwxr-xr-x@ 40 root wheel 1360 15 Dec 13:59 bin/

1234567890

| Column | Description |
|---|---|
| 1 | file type and permissions |
| 2 | number of links |
| 3 | owner of file |
| 4 | group owner of file |
| 5 | file size in bytes |
| 6 | time and date last modified |
| 7 | file name |

Permissions determine which users are allowed to view (r), run

(x), or modify (w) a given file (deleting a file requires w permission on the directory that contains it). The owner of

a file can alter the permissions using the chmod command. In the

above code block, the owner has privileges to modify, view, and run; and

the members of the group wheel have privileges to view and run.

| File Type | Access |
|---|---|
| (-) regular file | (r) read |
| (d) directory | (w) write |
| (l) symbolic link | (x) execute |
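A short experiment shows how chmod changes the permission string reported by ls -l. This is a sketch under stated assumptions: the file name report.txt is invented, and the octal form 640 (where 4 stands for r, 2 for w, and 1 for x, summed per owner, group, and other) is one of several notations that chmod accepts.

```shell
# Create a scratch file and set its permissions explicitly.
# (The file name report.txt is an illustrative choice.)
workdir=$(mktemp -d)
cd "$workdir"
touch report.txt

chmod 640 report.txt             # owner rw-, group r--, other ---
perms_before=$(ls -l report.txt | cut -c1-10)

chmod u+x report.txt             # additionally let the owner run the file
perms_after=$(ls -l report.txt | cut -c1-10)

echo "$perms_before -> $perms_after"
```

The cut -c1-10 step keeps only the ten characters of the first column, so any trailing marker such as @ is ignored.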

Unix provides hundreds of useful tools. A brief summary of how to use

a particular command (the manual, or man pages) can be displayed by

typing man command at the command prompt. More detailed

instructions for some programs are provided through the GNU Texinfo

system.

$ man pwd

$ man bash

$ info bash

Within the man pages, press the down arrow key or return/enter to scroll down a single line and the space bar or n key to move forward one page. Press the h key to get a help screen with detailed navigation instructions. Press q to quit and return to the shell prompt. Within the Texinfo pages, press ? for a summary of basic commands. Press [ctrl-x][ctrl-c] to quit.

Try this on your own

Check out the “man pages” of a few of the commands listed below.

| Command | Description |
|---|---|
| cd | change directory |
| pwd | show the current working directory |
| mkdir | make a new directory |
| rmdir | remove an empty directory |
| ls | list a directory’s contents |
| chmod | change file permissions |
| cp | copy a file |
| mv | move a file |
| rm | remove a file |
| touch | update an existing file’s date stamp; create an empty file |
| more | view the contents of a long file page by page |
| head | show the beginning of a file |
| tail | show the end of a file |
| rs | reshape tabular data |
| bc | simple calculator |
| echo | echo arguments to the screen |
| man | get instructions for a given command |
| sort | lexical sort |
| uniq | remove duplicate lines |
| wc | word count |
| find | search through the file hierarchy |
| grep | pattern finder |
| sed | the Unix Stream EDitor |
| awk | a line-based stream editor |
| cat | can be used to concatenate and/or print files |

Four of the commands in this list (find, grep, sed, and

awk) are extremely powerful programs in their own right. find

and grep are used to perform pattern matching on file names and

file contents, respectively. sed and awk are rather flexible stream editors

that implement programming languages of their own. (A stream is

a channel for serial data. For instance, Unix’s standard output stream,

stdout, directs text output to the terminal.) awk differs from

sed in that it splits each line it reads into fields and provides variables and arithmetic for transforming them in

a specified way. Entire books have been devoted to these utilities, and

we can’t do them justice in these notes.

Try this on your own

Using the mkdir command, make two directories, parent and

child, at the same level of the file system hierarchy. With the

touch command, create an empty file inside the child

directory. Now move the child directory inside of parent with

the mv command. What happens when you try to remove parent

without first removing child? What happens when you try to

remove child without first removing its contents? Remember that

there are two distinct remove commands, one for directories and one

for files.

In the above exercise, the error messages visible in the terminal window are sent via stderr, the standard error stream.

Job control

Unix is a multitasking operating system that can run many programs (or

jobs) at once. Whenever you type a command into the shell, that command

is run until it is completed. The output of that command is dumped to

stdout and thus appears in the terminal window. If you do not want

a long-running job to commandeer the shell, you have the option of

running it in the background. This is accomplished by appending an

ampersand (&) to the command. It is also possible to kill

([ctrl-c]) or suspend ([ctrl-z]) a job running in the

foreground.

| Action | Result |
|---|---|
| [ctrl-z] | suspend the current job |
| [ctrl-c] | terminate the current job |
| jobs | list all jobs |
| fg | move a job to the foreground |
| bg | move a job to the background, as if it had been started with an ampersand (&) |
| kill -STOP | suspend a job |
| kill | terminate a job |
| ps | process status |
| top | show statistics for all running jobs |
| nice | set job priority |

The simplest way to refer to a job is with the combination of a percent

character (%) followed by the job’s number. The symbol pair %+

refers to the current job and %- to the previous job. In any output

from the shell or the jobs command, the current job is always

flagged with a + and the previous job with a -.

$ ./my-program

[ctrl-z]

[1]+ Stopped ./my-program

$ ./my-other-program

[ctrl-z]

[2]+ Stopped ./my-other-program

$ jobs

[1]- Stopped ./my-program

[2]+ Stopped ./my-other-program

$ bg %1

[1] - Running ./my-program

$ kill %2

[2] + Terminated ./my-other-program

$ ./my-other-program &

[2] 49530

$ jobs

[2]+ Running ./my-other-program

Jobs can also be referred to using the process identifier (PID) provided

by the operating system. This number is the one reported in ps and

top; in the block above, my-other-program’s PID is 49530.
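Inside a script, where there is no keyboard to press [ctrl-z] or [ctrl-c], a background job is usually managed through its PID. The sketch below makes several assumptions that go beyond these notes: sleep stands in for a real long-running program, the special variable $! is used to obtain the PID of the most recent background job, kill -0 is used only to test whether a process exists, and wait collects the finished job.

```shell
# Start a long-running command in the background.
# (sleep stands in for a real long-running program.)
sleep 30 &
pid=$!                      # PID of the most recent background job

# kill -0 sends no signal; it only tests whether the process exists.
if kill -0 "$pid" 2> /dev/null
then
    state_before=running
else
    state_before=gone
fi

kill "$pid"                 # ask the job to terminate
wait "$pid" 2> /dev/null    # collect the job and its exit status
status=$?

if kill -0 "$pid" 2> /dev/null
then
    state_after=running
else
    state_after=gone
fi

echo "before: $state_before, after: $state_after, exit status: $status"
```

A nonzero exit status here reflects the fact that the job was terminated by a signal rather than finishing on its own.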

Pattern matching

One key advantage that a shell has over graphical file managers is the ability to select files based on pattern matching of the filename—that is, the ability to recognize specific sequences of letters and numbers in a filename. This feature is implemented using special symbols called wildcards.

| Symbol | Meaning |
|---|---|
| * | matches zero or more characters |
| ? | matches exactly one character |
| [abc] | matches exactly one character listed |
| [a-c] | matches exactly one character in the given range |
| [!abc] | matches any one character that is not listed |
| [!a-c] | matches any character that is not in the given range |
| [[:alpha:]] | matches any alphabetical character |
| [[:upper:]] | matches any upper-case alphabetical character |
| [[:lower:]] | matches any lower-case alphabetical character |
| [[:alnum:]] | matches any alpha-numeric character |
| [[:digit:]] | matches any single digit |
| [[:punct:]] | matches any piece of punctuation |
| {word1,word2,word3} | matches exactly one entire word from the comma-separated list |

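(BASH also supports extended glob patterns, such as !(pattern) and +(pattern), that allow

for more complex matching. These can be activated

in BASH by setting the extglob shell option with shopt. Full regular expressions, described in man re_format on BSD and macOS, are a separate syntax used by tools such as grep and sed.)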

The following five commands illustrate how pattern matching works. The

first removes every non-hidden file from the current directory (since * matches

every possible sequence of letters and numbers that could exist in

a file name). The second moves all html files that have physics in

their name to a directory called html. The third lists all files

pic1, pic2 or pic3 that have the file extension jpg,

png, or eps. The fourth copies all files with a single

character preceding the .dat extension into the directory specified. And the fifth opens the files binomial.cpp, binomial.jl, binomial.py, and binomial.rs from the lab1 directory in separate vim tabs.

$ rm *

$ mv *physics*.html html

$ ls pic[1-3].{jpg,png,eps}

$ cp ?.dat my-directory

$ vim -p lab1/binomial.{cpp,jl,py,rs}

Each of these actions would be rather tedious to perform via point-and-click with a mouse.
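A harmless way to see what a pattern matches before using it with rm or mv is to hand it to echo. The sketch below assumes a scratch directory populated with invented file names; only the wildcard behaviour itself comes from the table above.

```shell
# Populate a scratch directory with sample files (names are illustrative).
globdir=$(mktemp -d)
cd "$globdir"
touch a.dat b.dat xy.dat run1.log run2.log run10.log notes.txt

one_char=$(echo ?.dat)             # exactly one character before .dat
any_dat=$(echo *.dat)              # any number of characters before .dat
single_digit=$(echo run[0-9].log)  # one digit only, so run10.log is excluded
not_run=$(echo [!r]*)              # names that do not start with r

echo "$one_char"
echo "$any_dat"
echo "$single_digit"
echo "$not_run"
```

The shell expands each pattern into a sorted list of matching names before echo ever runs, which is why echo is a convenient preview tool.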

Try this on your own

Write a BASH command that lists the names of all files and directories in the current working directory that do not begin with a vowel.

Since *, ?, and ! (as well as $, <, >, and

|, as we shall see shortly) have special meanings, BASH provides

access to those characters when they are preceded by a backslash. These

are examples of escape sequences. Alternatively, any of these

characters can be written directly if they are enclosed in single

quotes. All but the exclamation point and dollar sign can be written

directly in double quotes.

$ echo \?\!\*\$\<\|\>\\\~

?!*$<|>\~

$ echo '?!*$<|>\~'

?!*$<|>\~

$ echo "?\!*\$<|>\~" # inside double quotes, BASH keeps the backslash before !

?\!*$<|>\~

Spaces also need to be escaped since spaces are the shell’s natural

delimiter: i.e., mv initial values.txt backup.txt does not take the

file initial values.txt and rename it backup.txt; rather, the

shell assumes that initial, values.txt, and backup.txt are

three distinct command line arguments (which is an error because, given more than two arguments, mv

expects the last to be an existing directory). Although it is permitted to create file and directory names

that include spaces, this is a bad practice and should be avoided.

$ echo Five spaces:\!\ \ \ \ \ \!

Five spaces:!     !

$ echo "Five spaces:\!     \!" # inside double quotes, BASH keeps the backslashes before !

Five spaces:\!     \!

$ echo 'Five spaces:!     !'

Five spaces:!     !

$ mkdir "My Favourites"

$ touch "My Favourites/latest updates.txt"

$ ls My\ Favourites/latest\ updates.txt

My Favourites/latest updates.txt
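The same word-splitting rule applies when a file name is stored in a variable, which is a common source of bugs in scripts. The sketch below is an illustration only: it borrows the file name from the mv example, and it uses set -- together with $# (the argument count, described later in these notes) purely as a way of counting how many words the shell produced.

```shell
# A file name containing a space (taken from the mv example above).
name="initial values.txt"

# set -- replaces the positional parameters, and $# then counts them,
# which reveals how many separate words the shell produced.
set -- $name          # unquoted: split at the space
unquoted_count=$#
set -- "$name"        # quoted: kept together as one word
quoted_count=$#

echo "unquoted: $unquoted_count argument(s), quoted: $quoted_count argument(s)"
```

Wrapping every variable reference in double quotes is a cheap habit that keeps such names intact.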

Try this on your own

Execute a command that writes to the screen: Sally said, "Hello!"

Redirection and chaining

In addition to files on disk, Unix provides three special streams that

are always available, two of which have been mentioned already: stdin

(the keyboard), stdout (the screen), and stderr (error messages output

to the screen). These, and any other open files, can be redirected.

Redirection simply means capturing output from a file, command, program,

script, or even code block within a script and sending it elsewhere. For

instance, text entered at the command prompt after the echo command

would normally be sent, via the stdout stream, directly to the terminal.

It can be redirected, using the characters below, to appear in, say,

a text file instead. Such output can also be piped to another command,

where it serves as input.

| Symbol | Meaning |
|---|---|
| &1 | stdout |
| &2 | stderr |
| /dev/null | the null stream |
| > | redirect stdout to file (overwrite: replace existing file, if any) |
| >> | redirect stdout to file (append: add new data to the end of an already-existing file) |
| < | redirect file to stdin |
| 2> | redirect stderr to file |
| \| | pipe output of one command to input of another |
| && | chain two commands, with the second executed iff the first returns an exit status of zero |
| \|\| | chain two commands, with the second executed iff the first returns a nonzero exit status |
| $( ) or ` ` | evaluate expression in place |
| ( ) | group commands, evaluate in a new subshell |
| { } | group commands, evaluate in current shell |
| xargs | format input as an argument list |
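The chaining and grouping entries in the table are easiest to understand by experiment. The sketch below uses the standard commands true and false, which do nothing except return an exit status of zero and one, respectively; the variable names are invented for illustration.

```shell
# && runs the second command only if the first succeeds;
# || runs it only if the first fails.
and_result=$(true && echo "ran after success")
or_result=$(false || echo "ran after failure")
skipped=$(false && echo "never printed")

# ( ) runs in a subshell, so changes made inside do not leak out;
# { } runs in the current shell, so they do.
counter=1
( counter=2 )
after_subshell=$counter
{ counter=3; }
after_group=$counter

echo "$and_result / $or_result / [$skipped]"
echo "after ( ): $after_subshell, after { }: $after_group"
```

Note that the braces need surrounding spaces and a semicolon (or newline) before the closing brace, whereas the parentheses do not.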

Note that we can redirect stdout and stderr separately:

$ cmd > stdout-file 2> stderr-file

Here, whatever output cmd generates that would normally be sent to

the screen (via stdout) is instead redirected (by >) to a file named

stdout-file; whatever error messages cmd generates that would

normally be sent through the stderr stream to the screen are instead

redirected (by 2>) to a second file, stderr-file.

The standard error output and the standard output can also be redirected to a single file:

$ cmd > stdall-file 2>&1

Here, > redirects the stdout output of cmd to a file,

stdall-file, and the 2> redirection command sends the stderr

output to &1, which is a stand-in for the stdout stream. Thus, the

stderr material is sent first to the stdout stream, then to

stdall-file, as all standard output is in turn redirected there.

It is also possible to suppress output to stderr entirely.

$ rmdir mydirectory

rmdir: /usr/home/ksdb/mydirectory: Directory not empty

$ rmdir mydirectory 2>&-

$ rmdir mydirectory 2> /dev/null

The first command attempts to remove a directory that is not empty, and

rmdir reports the problem to the user via stderr. The next two commands

suppress the error message: 2>&- closes the stderr stream, and 2> /dev/null redirects it

to the null stream (which discards everything it’s fed). Whether

or not the error message is suppressed, the directory is not removed

as long as it contains anything.
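The different stderr redirections can be compared side by side with a command that is known to write to both streams. In the sketch below, the shell function noisy is a hypothetical stand-in for such a command (functions are not otherwise covered in these notes), and the file names are invented.

```shell
# A stand-in command that writes one line to each stream.
# (The function name noisy and the file names are illustrative.)
noisy() {
    echo "normal output"          # goes to stdout
    echo "error message" >&2      # goes to stderr
}

outdir=$(mktemp -d)
noisy > "$outdir/out.txt" 2> "$outdir/err.txt"   # one file per stream
noisy > "$outdir/all.txt" 2>&1                   # both streams, one file
only_stdout=$(noisy 2> /dev/null)                # stderr discarded

cat "$outdir/out.txt"
cat "$outdir/err.txt"
wc -l < "$outdir/all.txt"
echo "$only_stdout"
```

The order of the redirections matters: in cmd 2>&1 > file, stderr is pointed at the terminal (where stdout was at that moment) before stdout is moved to the file, so the error messages still appear on screen.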

The next few examples illustrate how simple commands can be made to work together to produce complex actions.

$ whoami

ksdb

$ echo Hello, I am user $(whoami)

Hello, I am user ksdb

$ echo Hello, I am user $(whoami) > file1.txt

$ echo Goodbye > file2.txt

$ cat file1.txt file2.txt

Hello, I am user ksdb

Goodbye

$ echo Overwritten > file1.txt

$ echo Appended >> file2.txt

$ cat file1.txt file2.txt

Overwritten

Goodbye

Appended

Note that if standard output is redirected to a file that does not exist

yet, the file will be automatically created; the touch command is

unnecessary. Recall that cat allows for the reading and

concatenation of files; listing multiple files after the cat

command, as in the above case, results in their being sent to stdout

sequentially.

$ { echo first line; echo second line; echo third line; } > file.txt

$ cat file.txt

first line

second line

third line

$ echo "4+6" | bc

10

$ echo The sum 5+7 is equal to `echo 5+7 | bc`

The sum 5+7 is equal to 12

$ echo The product 6*2 is equal to $(echo 6*2 | bc)

The product 6*2 is equal to 12

Here, the result of the echo "4+6" command is piped from the

standard output stream to serve as input for the basic calculator

(bc) command. When 4+6 is entered into the basic calculator, the

result is computed. Remember that ` ` and $( ) cause the

contents to be evaluated as though they existed in a separate command

line; the result is then inserted into the present command line.

$ echo 'export PATH=$PATH:/usr/home/ksdb/bin' >> ~/.bash_profile

Here, we are again reassigning the variable PATH to include not only

its present value (accessed by $PATH), but the appended directory

path /usr/home/ksdb/bin, as well, and exporting this new value so that it is

inherited by programs started from the shell. The single quotes keep $PATH from being expanded before the line is written to the file. The >> redirection command

redirects the standard output and appends it to the file following the

symbol, in this case, ~/.bash_profile. The tilde refers to your home

directory; .bash_profile is a startup script, a type of dot file

whose contents are read and executed every time a new login shell is

started. Thus, the change to PATH’s value is not limited to the

current session but persists across future sessions, as

the export command will be executed every time such a shell is started.

$ echo I work all day | sed 's/day/night/'

I work all night

Recall that the sed command allows for the modification of data in

an input stream. Here, the input is I work all day, redirected from

stdout by the pipe character. The particulars of sed can be viewed

using the man command; here, the s denotes that a substitution

should be performed in the stream: the first item between slashes should

be replaced by the second.
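By default, the s command replaces only the first match on each line. The sketch below contrasts that behaviour with the g (global) flag, which is standard sed but not mentioned above; the sample sentence is invented.

```shell
# Without a flag, only the first match on each line is replaced.
first_only=$(echo "day after day" | sed 's/day/night/')

# With the g flag, every match on the line is replaced.
every=$(echo "day after day" | sed 's/day/night/g')

echo "$first_only"
echo "$every"
```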

In the following example, cat > is used to redirect keyboard input

(the stdin stream) to a file. The numbers typed subsequently by the user

are sent to this file; [ctrl-d] signals end-of-file (EOF),

which tells cat to stop reading

keystrokes.

$ cat > meas.dat

3.52 ## from here ...

3.21

3.79

3.66

3.21

3.31

3.52 ## ... to here is the recorded input

[ctrl-d]

The following example invokes the awk command, which reads through

meas.dat line by line and adds all the values in the first column to

a running sum, held by the variable x. The first column of the data

file (in this case, the only column) is accessed via $1; this

notation is common within data manipulation commands. A counter

variable, n, is also kept, and used in the computation of the

average after each line is read, sent via > to

running_average.dat.

$ awk '{ x += $1; n += 1; print x/n; }' meas.dat > running_average.dat

$ cat running_average.dat

3.52

3.365

3.50667

3.545

3.478

3.45

3.46

$ awk '{ x += $1; n += 1; print x/n; }' meas.dat | tail -n1

3.46

$ cat meas.dat | sort | uniq

3.21

3.31

3.52

3.66

3.79

$ cat meas.dat | sort | uniq | wc -l

5

Piping the awk output to the tail command results in the

printing of the output’s final line. cat meas.dat | sort | uniq

first pipes meas.dat to the sort command, which rearranges the

contents in ascending order. This ordered file is then piped to the

uniq command, which filters out duplicate values. wc is a word

and byte counting utility; the option -l results in the number of

lines of input being produced. Hence, we determine that meas.dat

contains five unique lines.

BASH offers several features to save you keystrokes. File completion

is invoked by the tab key. For example, rather than enter cat

running_average.dat in the example above, you could just type cat

run[tab] and allow BASH to fill in the rest. Access to the command

history is available via the up and down arrow keys. This means that

the final command issued above can be achieved with [up-arrow] | wc

-l.

Numerical data is often stored in text files using a columnar format. There are a variety of tools that allow you to select and manipulate columns.

$ jot 21 0.00 1.00 | rs 3 7 | tee array.txt | rs -T > arrayT.txt

$ cat array.txt

0.00 0.05 0.10 0.15 0.20 0.25 0.30

0.35 0.40 0.45 0.50 0.55 0.60 0.65

0.70 0.75 0.80 0.85 0.90 0.95 1.00

$ awk '{ print $1 }' arrayT.txt > col1.txt

$ awk '{ print $2 }' arrayT.txt > col2.txt

$ awk '{ print $3 }' arrayT.txt > col3.txt

$ paste col3.txt col2.txt col1.txt

0.70 0.35 0.00

0.75 0.40 0.05

0.80 0.45 0.10

0.85 0.50 0.15

0.90 0.55 0.20

0.95 0.60 0.25

1.00 0.65 0.30

$ echo The sum of the values in column two is \

$(awk '{ x += $1; } END { print x; }' col2.txt)

The sum of the values in column two is 3.5

Above, jot outputs 21 numbers equally spaced between 0.00 and 1.00.

This output is piped to rs, which reshapes the data, organizing it

into three rows and seven columns. This output is in turn piped to the

tee command, which makes a copy of the output in the file

array.txt. A final pipe to the reshaping command reorganizes the

data into three columns and seven rows, and > sends this transposed

version of the data to arrayT.txt.

The Unix philosophy emphasizes small command line tools with narrow

capabilities that can be chained together to produce complex results and

behaviours. Input and output take the form of a text stream, and the

output of one program can serve as the input for another using the pipe

command (|).

For instance, the following set of instructions lists the dozen most

common words appearing in the file katex-math.css:

$ tr -cs '[:alpha:]' '\n' < katex-math.css | sort | uniq -c | sort -rn | head -n 12

8 katex

7 right

7 padding

6 px

6 em

6 display

4 eqno

3 size

3 number

3 margin

3 left

3 font

Now let’s plot the frequency histogram for the 101 most common words in Jane Austen’s Pride and Prejudice, the most downloaded book on Project Gutenberg.

$ curl -k https://www.gutenberg.org/files/1342/1342-0.txt -o Pride-and-Prejudice.txt

$ tr -cs '[:alpha:]' '\n' < Pride-and-Prejudice.txt | sort | uniq -c | sort -rn > freq-hist.dat

$ head -n5 freq-hist.dat

4218 the

4187 to

3707 of

3503 and

2138 her

$ gnuplot

> set terminal dumb

> set tics out

> unset key

> plot[0:100] "freq-hist.dat" using 0:1 with impulse title ""

+ + + + + +

4500 +-+---------------------------------------------------------------+-+

|* |

4000 +-|* |-+

|* |

3500 +-|** |-+

|** |

3000 +-|** |-+

|** |

2500 +-|** |-+

|** |

|*** |

2000 +-|***** |-+

|****** |

1500 +-|******* |-+

|************ |

1000 +-|************** |-+

|******************** |

500 +-|*********************************** |-+

|***************************************************************|

0 +-+---------------------------------------------------------------+-+

+ + + + + +

0 20 40 60 80 100

Scripts

Scripts are sequences of commands that are collected in a single file

and executed together. Unix scripts begin with the shebang (#!)

followed by the complete path name of the script interpreter—in this

case #!/bin/bash. Subsequent lines beginning with a hash (#) are

ignored and thus provide a way of inserting comments into the script.

A script must be flagged as executable (chmod +x) before it can be

run.

$ whereis bash

/bin/bash

$ cat > square

#!/bin/bash

# let i range over the values one to four

for i in 1 2 3 4

do

# pipe i*i to the calculator and assign the result to j

j=$(echo $i*$i | bc)

echo $i squared is $j

done

exit

[ctrl-d]

$ chmod +x square

$ ./square

1 squared is 1

2 squared is 4

3 squared is 9

4 squared is 16

$ ./square | grep 9

3 squared is 9

$ ./square | grep 1

1 squared is 1

4 squared is 16

Remember that grep searches through a file line by line and prints

any that contain the pattern specified. Above, i is a BASH variable

and $i is its current value. Note that variables in a script are

just strings of characters and have no numeric sense—unless they are

specifically interpreted as numbers, e.g., by piping to bc or by

using the BASH keyword let or BASH’s double-parenthesis environment

(( … )):

$ cat > myscript

#!/bin/bash

A=1

B=1

C=1

let A=$A+1

B=$B+1

C=C+1

echo A $A

echo B $B

echo C $C

((B+=1))

echo B $B

exit

[ctrl-d]

$ chmod +x myscript

$ ./myscript

A 2

B 1+1

C C+1

B 3
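A further way to force numeric interpretation, not used in the script above, is arithmetic expansion, written $(( … )), which evaluates an integer expression and substitutes the result. The sketch below is illustrative; the variable names are arbitrary.

```shell
i=7
j=$((i * i))          # arithmetic expansion: 49
k=$((j % 10))         # remainder after division by ten: 9
half=$((i / 2))       # integer division truncates: 3
text="$i*$i"          # plain assignment: the literal string 7*7

echo "$i squared is $j; last digit $k; half of $i is $half; as text: $text"
```

Only integers are handled this way; for decimal arithmetic, piping to bc remains the usual approach.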

Here is an implementation of a simple counter:

$ cat > files

#!/bin/bash

i=1

while (( $i < 12 ))

do

echo This is the content of file$i.txt > file$i.txt

echo Oh, and this too\! >> file$i.txt

let i=i+1

done

exit

[ctrl-d]

$ chmod +x files

$ ./files

$ ls

file1.txt file2.txt file5.txt file8.txt

file10.txt file3.txt file6.txt file9.txt

file11.txt file4.txt file7.txt files


$ ls file?.txt

file1.txt file3.txt file5.txt file7.txt file9.txt

file2.txt file4.txt file6.txt file8.txt

$ ls file1*.txt

file1.txt file10.txt file11.txt

$ cat file4.txt

This is the content of file4.txt

Oh, and this too!

A script may read in arguments from the command line, entered on the

same line as the executable call separated by spaces. The symbols

$1, $2, $3, … refer to the first, second, third …

arguments, respectively. The number of arguments passed can be queried

with $#. The complete set of arguments is referred to by $* or

$@.

$ cat > argc

#!/bin/bash

echo $#

[ctrl-d]

$ chmod +x argc

$ ./argc

0

$ ./argc this

1

$ ./argc this is

2

$ ./argc this is a test

4

$ cat > bookends

#!/bin/bash

if [[ -z $1 ]]

then

echo usage: $0 filename

exit 1

fi

echo $1:

head -n1 $1

echo .

echo .

tail -n1 $1

exit

[ctrl-d]

$ chmod +x bookends

$ ./bookends

usage: ./bookends filename

$ cat > fox.txt

The

quick

brown fox

jumped over the

lazy dog

[ctrl-d]

$ ./bookends fox.txt

fox.txt:

The

.

.

lazy dog
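When the number of arguments is not known in advance, a common idiom is to process $1 and then discard it with shift until none remain. The sketch below assumes a hypothetical shell function named describe, which receives its arguments exactly as a script would; shift and shell functions are not otherwise covered in these notes.

```shell
# A function receives arguments the same way a script does.
# (The function name describe is illustrative.)
describe() {
    echo "count: $#"
    while [ $# -gt 0 ]
    do
        echo "arg: $1"
        shift              # discard $1; $2 becomes $1, and so on
    done
}

report=$(describe alpha "beta gamma" delta)
echo "$report"
```

The quoted pair "beta gamma" arrives as a single argument, so the count is three rather than four.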

Quotes

There are many subtleties to the use of quotes in BASH. The

backward-style single quotes (backticks), located above the [tab] button on the

keyboard, are used to evaluate an expression in place.

$ echo `echo 4+7 | bc`

11

The echo 4+7 | bc has been evaluated in place, then acted upon by

the first echo command. The straight-style quotations (located to

the left of [return]) come in two varieties. The single quote

signifies that everything contained within is pure text, and no

replacements are to be made.

$ echo '$PATH'

$PATH

The double straight quotes are used to signify that the contents are

acting as a unit. For instance, double quotations placed around multiple

words in the command line of a script invocation allow for them to act

as a single argument. Double quotations are also used in conjunction

with the echo command to signify blocks of material that are to be

echoed. Special characters within double quotes, with the exception of

the exclamation mark, dollar sign, backtick, and backslash, need no escape sequences. Unlike with single

straight quotes, replacements and actions are carried out within the

double quotations.
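The contrast between the two kinds of straight quotes can be seen by assigning the same sort of text both ways. The sketch below is only an illustration; the variable names and sample text are invented.

```shell
fruit=apple

single='$fruit costs $(echo 4+7)'        # single quotes: kept as literal text
double="$fruit has ${#fruit} letters"    # double quotes: expansions are performed
escaped="\$fruit is $fruit"              # a backslash protects one dollar sign

echo "$single"
echo "$double"
echo "$escaped"
```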

There’s a subtle difference in how the quoted expressions "$@" and

"$*" are expanded. The former is understood to mean "$1" "$2" "$3"

..., and the latter, "$1 $2 $3 ...".

$ cat > quotes

#!/bin/bash

echo ranging over '$@'

for x in $@; do echo " -" $x; done

echo ranging over '$*'

for x in $*; do echo " -" $x; done

echo ranging over '"$@"'

for x in "$@"; do echo " -" $x; done

echo ranging over '"$*"'

for x in "$*"; do echo " -" $x; done

[ctrl-d]

$ chmod +x quotes

$ ./quotes one two "three and a half" four

ranging over $@

- one

- two

- three

- and

- a

- half

- four

ranging over $*

- one

- two

- three

- and

- a

- half

- four

ranging over "$@"

- one

- two

- three and a half

- four

ranging over "$*"

- one two three and a half four

Indentation in scripts has no effect other than to aid readability. It

is also not strictly necessary that all scripts terminate with the

exit command; the program will stop when it reaches the end of the

script. Sometimes, though, and especially when dealing with control

structures, a forced quit may be desired, and may be achieved via an

exit or an exit 1 (to report a non-zero exit status, which signals an error)

command placed in the script.
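The number handed to exit becomes the exit status, which the calling shell stores in the special variable $?. The sketch below uses subshells, written ( ... ), as stand-ins for complete scripts so that everything fits in one file; the variable names ok and failed are illustrative.

```shell
#!/bin/bash
( exit 0 ) && ok=$?        # a subshell that quits normally: status 0
( exit 1 ) || failed=$?    # a subshell that quits with exit 1: status 1
echo "$ok $failed"         # prints 0 1

# The status steers && (run on success) and || (run on failure).
( exit 1 ) || echo "the command reported a failure"
```

By convention a status of zero means success and any non-zero value means failure.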

Variables

A BASH variable is just a string associated with a name. A variable is

assigned with the = operator and recalled from memory with the $

prefix.

$ EXE_PATH=/usr/bin

$ EXE=wc

$ echo $EXE_PATH/$EXE

/usr/bin/wc

$ ls -l $EXE_PATH/$EXE

-r-xr-xr-x 1 root wheel 38704 31 May 2008 /usr/bin/wc

An alternate brace form ${} is used wherever concatenation might

lead to ambiguity about which characters belong to the variable name.

$ NUM=0001

$ FILENAME1=infile-$NUM.xml

$ FILENAME2=outfile${NUM}a.yaml

$ FILENAME3=outfile${NUM}b.json

$ echo $FILENAME1 $FILENAME2 $FILENAME3

infile-0001.xml outfile0001a.yaml outfile0001b.json

Certain variables are predefined:

$ echo The BASH binary is located in $BASH

The BASH binary is located in /bin/bash

$ echo My home directory is $HOME

My home directory is /usr/home/ksdb

$ echo I am currently in directory $PWD

I am currently in directory /usr/home/ksdb

$ echo $RANDOM is a pseudorandom number selected from the range 0-32767

15028 is a pseudorandom number selected from the range 0-32767

The % and # symbols can be used to strip trailing and leading

characters, respectively, from a variable’s string value. It’s common to

want to remove a file extension or the path prefix.

$ FILENAME=output.dat; echo ${FILENAME%.dat}{.eps,.xml}

output.eps output.xml

$ echo in${FILENAME#out}

input.dat

Various forms of pattern matching are also possible.

$ PATTERN="L*/debug-?/"

$ FILENAME=L32/debug-A/outfile.dat

$ echo ${FILENAME#$PATTERN}

outfile.dat
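A single # or % removes the shortest matching piece; doubling the symbol (## or %%) removes the longest. The sketch below splits a path into its parts under that rule. The path and the variable names (base, dir, stem, ext) are hypothetical.

```shell
#!/bin/bash
FILENAME=/home/ksdb/runs/archive.tar.gz   # hypothetical path

base=${FILENAME##*/}   # longest prefix matching */ removed
dir=${FILENAME%/*}     # shortest suffix matching /* removed
stem=${base%%.*}       # longest suffix matching .* removed
ext=${base#*.}         # shortest prefix matching *. removed

echo "$base"   # prints archive.tar.gz
echo "$dir"    # prints /home/ksdb/runs
echo "$stem"   # prints archive
echo "$ext"    # prints tar.gz
```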

The # used in the prefix position can also be used to compute the

string length. Subranges of strings are specified with positional

indices separated by a colon:

$ VAR="this particular string"

$ echo $VAR is ${#VAR} characters long

this particular string is 22 characters long

$ echo T${VAR:1:4} S${VAR:17}

This String

Note that the first number is a zero-based offset into the string and the second is the number of characters to extract (not an end position). If no length is specified, the remainder of the string is printed.
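A few more cases may help make the roles of the two numbers concrete. This is only a sketch: the variable names first, middle, rest, and last3 are illustrative, and the negative-offset form is an extra not used elsewhere in these notes.

```shell
#!/bin/bash
VAR="this particular string"

first=${VAR:0:4}      # offset 0, four characters
middle=${VAR:5:10}    # offset 5, ten characters
rest=${VAR:16}        # offset 16 through to the end
last3=${VAR: -3}      # a negative offset counts from the end (the space is required)

echo "$first|$middle|$rest|$last3"   # prints this|particular|string|ing
```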

$ cat > var

#!/bin/bash

FILENAME1=config-0027.xml

FILENAME2=${FILENAME1%.xml}.dat

echo $FILENAME1 "-->" $FILENAME2 "-->" ${FILENAME2/config/output}

ROOTNAME=${FILENAME1%.xml} #remove '.xml' from 'config-0027.xml'

COUNT=${ROOTNAME#config-} #remove 'config-' from 'config-0027'

echo The file number is $COUNT

[ctrl-d]

$ chmod +x var

$ ./var

config-0027.xml --> config-0027.dat --> output-0027.dat

The file number is 0027

The arrow text, -->, is quoted to prevent > from being

interpreted as the redirection operator.

Array variables can be assigned with multiple space-separated elements

enclosed in parentheses. Both the full array and the individual elements

are accessible via the ${[]} notation.

$ cat > arrayscript

#!/bin/bash

A=( zero one two three four )

echo The array \"${A[*]}\" has ${#A[*]} elements

for (( i=0; i < ${#A[*]}; ++i))

do

echo element $i is ${A[i]}

done

[ctrl-d]

$ chmod +x arrayscript

$ ./arrayscript

The array "zero one two three four" has 5 elements

element 0 is zero

element 1 is one

element 2 is two

element 3 is three

element 4 is four

The subscript * has a special meaning here: ${A[*]} expands to every

element of A, as does ${A[@]}. It is not a general wildcard, however.

A subscript such as ? is not matched against the indices, and

${A[?]} simply produces an error.

$ cat > order

#!/bin/bash

if (( $# > 0 ))

then

A=($@)

{ for (( n=0; n<$#; ++n )); do echo ${A[n]}; done } | sort

fi

[ctrl-d]

$ chmod +x order

$ ./order apple car soda bottle boy

apple

bottle

boy

car

soda

$ ./order apple car "soda bottle" boy

apple

bottle

car

soda

The example above has a subtle error. Only four of the five words

appear, since "soda bottle" was entered as a single argument. The

for loop ranges over 0, 1, 2, and 3, since there are

only four arguments. The array, however, makes no distinction between

apple car "soda bottle" boy and apple car soda bottle boy,

reading five elements either way. Thus, the last element of the array is

not echoed.

Try this on your own

The example above is not carefully written. Add quotes to the program so that it correctly outputs the following.

$ ./order apple car "soda bottle" boy

apple

boy

car

soda bottle

Shell arithmetic

Bash arithmetic operations are carried out with the $(( )) or $[

] notation (the latter is an older form that is now deprecated).

Arithmetic operations are only valid for integers, and fractional

results are truncated toward zero. The basic calculator (bc) should be

used instead if non-integer values are required.

$ echo 1+1

1+1

$ echo $((1+1))

2

$ echo $[1+1]

2

$ echo $[4/5]

0

$ echo 4/5 | bc -l

.80000000000000000000
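Integer division in $(( )) discards the fractional part, and the remainder operator recovers what was discarded. A short sketch with illustrative variable names:

```shell
#!/bin/bash
q=$(( 7 / 2 ))       # 3: the fraction is dropped
neg=$(( -7 / 2 ))    # -3: truncation is toward zero, not downward
r=$(( 7 % 2 ))       # 1: remainder
negr=$(( -7 % 2 ))   # -1: the remainder takes the sign of the dividend

echo "$q $neg $r $negr"   # prints 3 -3 1 -1
```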

The operators and their precedence, associativity, and values are the same as in the C language. The following list of operators is grouped into levels of equal-precedence operators and listed in order of decreasing precedence.

| Symbol | Operation |
|---|---|
| id++ id-- | variable post-increment and decrement |
| ++id --id | variable pre-increment and decrement |
| + - | unary plus and unary minus |
| ! ~ | logical and bitwise negation |
| ** | exponentiation |
| * / % | multiplication, division, and remainder |
| + - | addition and subtraction |
| << >> | left and right bitwise shifts |
| <= >= < > | comparison |
| == != | equality and inequality |
| & | bitwise AND |
| ^ | bitwise exclusive OR |
| \| | bitwise OR |
| && | logical AND |
| \|\| | logical OR |
| expr ? expr : expr | conditional operator |
| = *= /= %= += -= <<= >>= &= ^= \|= | assignment |
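Precedence and associativity are easy to check directly at the prompt. The values in the comments below follow from the ordering in the table; the variable names a through f are only placeholders.

```shell
#!/bin/bash
a=$(( 2 + 3 * 4 ))         # 14: multiplication binds tighter than addition
b=$(( (2 + 3) * 4 ))       # 20: parentheses override precedence
c=$(( 2 ** 3 ** 2 ))       # 512: exponentiation groups right to left, 2 ** (3 ** 2)
d=$(( -2 ** 2 ))           # 4: unary minus binds tighter than exponentiation
e=$(( 1 << 2 + 1 ))        # 8: addition binds tighter than the shift
f=$(( 5 > 3 ? 10 : 20 ))   # 10: conditional operator

echo "$a $b $c $d $e $f"   # prints 14 20 512 4 8 10
```

When in doubt, explicit parentheses cost nothing and make the intent plain.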

Control structures

Bash provides mechanisms for looping (for, while, until) and

branching (if and case). The reserved word for has a syntax

for ranging over elements.

$ for colour in red green blue; do echo $colour-ish; done

red-ish

green-ish

blue-ish

$ cat > under10.bash

for i in 1 2 3 4 5 6 7 8 9

do

echo $i

done

[ctrl-d]

$ chmod +x under10.bash

$ ./under10.bash

1

2

3

4

5

6

7

8

9

The for construction causes everything inside the do …

done block to be executed repeatedly (here, nine times) with i

taking the values one to nine in turn.

A contiguous range of integer values can be covered as follows.

$ for i in {-5..10}; do echo $i; done | rs .

-5 -4 -3 -2 -1 0 1 2 3 4 5 6 7 8 9 10

It’s important that the range {from..to} contain

no spaces.

The bash for loop also supports a C-like syntax for counting. The

following code sums the numbers 1 to 9 and displays all powers of two

less than 200.

$ let j=0; for ((i=1;i<10;++i)); do let j+=$i; done; echo $j

45

$ for (( i=1; i<200; i*=2 )); do echo $i; done

1

2

4

8

16

32

64

128

Try this on your own

Write a chain of BASH commands that outputs the dollar amounts $1.00, $1.25, $1.50, …, $9.75 arranged in four columns.

The while keyword executes a code block as long as a specified

condition is met.

$ jot 24 | rs 4 | rs -T | tee array.txt

1 7 13 19

2 8 14 20

3 9 15 21

4 10 16 22

5 11 17 23

6 12 18 24

$ cat array.txt | while read a b c d; do echo $c $a; done

13 1

14 2

15 3

16 4

17 5

18 6

$ { while read a b c d; do echo $c $a; done } < array.txt

13 1

14 2

15 3

16 4

17 5

18 6

$ awk '{ print $3, $1}' array.txt

13 1

14 2

15 3

16 4

17 5

18 6

In the examples above, while is followed by a command (read) whose

success or failure determines how long the loop runs. while can also

be followed by a conditional expression, which evaluates to

true or false, in double parentheses.

$ cat > inc

#!/bin/bash

let i=0

while (( $i < 5 ))

do

echo $i

let i+=1

done

for ((j=0; j<5; ++j))

do

echo $j

done

[ctrl-d]

$ chmod +x inc

$ ./inc

0

1

2

3

4

0

1

2

3

4

until is similar to while, but the conditional in double

parentheses contains the stopping condition.

$ cat > inc

#!/bin/bash

let i=0

until (( $i == 5 ))

do

echo $i

let i+=1

done

for ((j=0; j<5; ++j))

do

echo $j

done

[ctrl-d]

$ chmod +x inc

$ ./inc

0

1

2

3

4

0

1

2

3

4

if allows for the conditional execution of a block of code.

if (( $i < 10 ))

then

let i=i+1

fi

if and the conditional test are a single statement. The word

then is a new command, appearing either on the next line or preceded by

a semicolon. The block is terminated by fi, the word if written

backwards. then and fi are analogous to do and done that

appear in the for, while, and until loops. It is possible to

have multiple cases covered by additional elif code blocks:

if (( $i == 5 ))

then

echo "i is five"

elif (( $i == 6 ))

then

echo "i is six"

elif (( $i == 7 ))

then

echo "i is seven"

else

echo "i is neither five, six, nor seven."

fi

A final, default block of code may be specified beneath else.
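The case keyword, mentioned earlier as the other branching construct, compares a string against a list of patterns and runs the first block that matches. A minimal sketch follows; the function name classify and its messages are invented for illustration.

```shell
#!/bin/bash
# classify is a hypothetical helper that reports on its first argument.
classify() {
    case "$1" in
        5)      echo "i is five" ;;
        6|7)    echo "i is six or seven" ;;        # | separates alternatives
        [0-9])  echo "i is another single digit" ;;
        *)      echo "i is something else" ;;      # default, like else
    esac
}

classify 5     # prints i is five
classify 7     # prints i is six or seven
classify 3     # prints i is another single digit
classify 42    # prints i is something else
```

Each block ends with a double semicolon, and the whole construct is closed by esac, the word case written backwards.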

Control structures based on for, while, until, and if

can be arbitrarily nested:

$ cat > concat

#!/bin/bash

for prefix in A B C

do

for suffix in one two three

do

echo $prefix$suffix

done

done

exit

[ctrl-d]

$ chmod +x concat

$ ./concat

Aone

Atwo

Athree

Bone

Btwo

Bthree

Cone

Ctwo

Cthree

Evaluation of expressions

Within a BASH script, control is often dependent upon a conditional

expression. An expression can be purely arithmetic in nature, in which

case it should be evaluated in the double-parentheses environment, ((

expression )). An expression that is boolean, on the other hand,

should be enclosed in double square brackets, [[ expression ]].

Multiple tests of the same nature can be combined within one set of

brackets with the logical operations ! (negation), &&

(and—both sub-expressions must be true for the entire expression to

evaluate to true), || (or—one or both of the sub-expressions must

be true for the entire expression to evaluate to true), and () (to

enclose various nested divisions of the expression).
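The logical operations can be sketched in both environments as follows. The variable names and the file name are placeholders chosen for this example.

```shell
#!/bin/bash
i=7
name="physics.dat"    # hypothetical file name; the file need not exist

range=no; kind=no; missing=no

# Two arithmetic tests joined by && inside (( ))
if (( i > 5 && i < 10 )); then range=yes; fi

# String tests grouped with ( ) and joined by || inside [[ ]]
if [[ -n $name && ( $name == *.dat || $name == *.txt ) ]]; then kind=yes; fi

# Negation with !
if [[ ! -d /no/such/directory ]]; then missing=yes; fi

echo "$range $kind $missing"    # prints yes yes yes
```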

$ cat > frac_arithmetic

#!/bin/bash

for (( i=1; i<=10; ++i ))

do

echo 1/$i^2 = `echo "1.0/"$i"^2" | bc -l`

done

[ctrl-d]

$ chmod +x frac_arithmetic

$ ./frac_arithmetic

1/1^2 = 1.00000000000000000000

1/2^2 = .25000000000000000000

1/3^2 = .11111111111111111111

1/4^2 = .06250000000000000000

1/5^2 = .04000000000000000000

1/6^2 = .02777777777777777777

1/7^2 = .02040816326530612244

1/8^2 = .01562500000000000000

1/9^2 = .01234567901234567901

1/10^2 = .01000000000000000000

$ cat > arithmetic

#!/bin/bash

i=23

let i=i+1

let j=2*$i+7

if (( $i < $j ))

then

echo $i "<" $j

elif (( $i == $j ))

then

echo $i = $j

else

echo $i ">" $j

fi

[ctrl-d]

The [[ ]] environment recognizes the following logical tests:

| Test | Condition |
|---|---|
| | True if |
| -d file | True if file exists and is a directory |
| -e file | True if file exists |
| -f file | True if file exists and is a regular file |
| -r file | True if file exists and is readable |
| -s file | True if file exists and has a size greater than zero |
| -w file | True if file exists and is writable |
| -x file | True if file exists and is executable |
| -N file | True if file exists and has been modified since it was last read |
| | True if |
| | True if |
| -z string | True if the length of string is zero |
| -n string | True if the length of string is non-zero |
| string1 == string2 | True if the strings are equal |
| string1 != string2 | True if the strings are not equal |
| string1 < string2 | True if string1 sorts before string2 lexicographically |
| string1 > string2 | True if string1 sorts after string2 lexicographically |
| arg1 OP arg2 | OP is one of -eq, -ne, -lt, -le, -gt, or -ge. These arithmetic binary operators return true if arg1 is equal to, not equal to, less than, less than or equal to, greater than, or greater than or equal to arg2, respectively. Both arg1 and arg2 must be (positive or negative) integers. |

$ touch physics.dat

$ cat > logical

#!/bin/bash

if [[ -e physics.dat ]]

then

echo "physics.dat exists"

fi

if [[ -s physics.dat ]]

then

echo "physics.dat exists and is not empty"

fi

[ctrl-d]

$ chmod +x logical

$ ./logical

physics.dat exists

$ cat > physics.dat

E=mc^2

[ctrl-d]

$ ./logical

physics.dat exists

physics.dat exists and is not empty

Beware of seemingly-mathematical comparisons. Within double square

brackets, <, >, and = involve lexical comparisons. For example ((

5 < 16 )) is true, but [[ 5 < 16 ]] is not, since the string

16 comes before the string 5 in standard alphanumeric order. The

proper integer comparison is [[ 5 -lt 16 ]].
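The three forms can be tried next to each other. In this sketch each flag starts as no and is switched to yes only if the corresponding test succeeds; the flag names are illustrative.

```shell
#!/bin/bash
lexical=no; numeric=no; arithmetic=no

[[ 5 < 16 ]]   && lexical=yes      # string comparison: "5" sorts after "16"
[[ 5 -lt 16 ]] && numeric=yes      # integer comparison inside [[ ]]
(( 5 < 16 ))   && arithmetic=yes   # integer comparison inside (( ))

echo "$lexical $numeric $arithmetic"   # prints no yes yes
```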

$ for i in 0 1 2 3 4 5 6 7 8 9; do touch example$i.dat; done

$ ls

example0.dat example2.dat example4.dat example6.dat example8.dat

example1.dat example3.dat example5.dat example7.dat example9.dat

$ find . -name "example[^13579].dat"

./example0.dat

./example2.dat

./example4.dat

./example6.dat

./example8.dat

$ ls example[1-9].dat | xargs -n3 echo

example1.dat example2.dat example3.dat

example4.dat example5.dat example6.dat

example7.dat example8.dat example9.dat

$ ls example?.dat | xargs -n2 echo

example0.dat example1.dat

example2.dat example3.dat

example4.dat example5.dat

example6.dat example7.dat

example8.dat example9.dat

$ find . -name "example[12358].dat" -delete

$ ls

example0.dat example4.dat example6.dat example7.dat example9.dat

$ [[ -e example0.dat ]] && echo file exists

file exists

$ [[ -e example1.dat ]] || echo file does not exist

file does not exist

$ [[ -z $(cat example0.dat) ]] && echo file is empty

file is empty

Functions

A function is a block of code that is invoked with its short-hand name followed by zero or more parameters. Parameters are handled in the same way as arguments to a script.

$ cat > fibonnaci

#!/bin/bash

function add {

if [[ -z $1 || -z $2 ]]

then

echo function add requires two arguments >&2

exit 1

else

sum=$1

let sum+=$2

echo $sum

fi

}

x0=0

x1=1

for (( i=0; i<12; ++i ))

do

x2=$(add $x0 $x1)

echo $x2

x0=$x1

x1=$x2

done

[ctrl-d]

$ chmod +x fibonnaci

$ ./fibonnaci | rs 3

1 2 3 5

8 13 21 34

55 89 144 233
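One detail worth knowing: when a function is called inside $( ), it runs in a subshell, so an exit inside it ends only that subshell and not the whole script. A common alternative is to report failure with return and to keep working variables private with local. The following is a sketch of that style; add_checked is a hypothetical variant of add, not part of the script above.

```shell
#!/bin/bash
# add_checked: hypothetical variant of add that uses return and local.
add_checked() {
    if (( $# != 2 )); then
        echo "add_checked requires two arguments" >&2
        return 1                  # non-zero status signals failure to the caller
    fi
    local sum=$(( $1 + $2 ))      # local: not visible outside the function
    echo "$sum"
}

total=$(add_checked 3 4)
echo "$total"                     # prints 7

if ! add_checked 3 2>/dev/null; then
    echo "call failed as expected"
fi
```

The caller can test the function's status with if, && or ||, exactly as for any other command.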

Useful idioms

One of the main uses for scripts is batch processing, i.e., launching

multiple instances of a program with different initial parameters.

Suppose that we have a program myprog1 that takes a command line

option --coupling-strength, computes some physical property that

depends on the value provided, and reports the answer to the standard

output stream. The following script will run the program for the values

0.0, 0.2, …, 1.0 and store the results in files

output-J0.0.dat, output-J0.2.dat, …, output-J1.0.dat,

collected in the runs1 directory.

$ cat > batch1

#!/bin/bash

EXE=/home/ksdb/bin/myprog1

[[ ! -e runs1 ]] && mkdir runs1

for J in `jot 6 0 1.0`

do

$EXE --coupling-strength=$J > runs1/output-J$J.dat

done

[ctrl-d]

$ chmod +x batch1

$ ./batch1

$ ls runs1

output-J0.0.dat output-J0.2.dat output-J0.4.dat

output-J0.6.dat output-J0.8.dat output-J1.0.dat

Consider another program myprog2 that takes a --lattice-size

parameter and dumps its results to a file outfile.dat in the current

working directory. The following script organizes the batch execution of

myprog2 invoked with various values of --lattice-size, with the results

stored in runs2/L8, runs2/L16, …, runs2/L48.

#!/bin/bash

EXE=/home/ksdb/bin/myprog2

[[ ! -e runs2 ]] && mkdir runs2

TOP=$PWD # value of the current working directory

for L in 8 16 20 24 32 40 48

do

DIR=runs2/L$L

[[ ! -e $DIR ]] && mkdir $DIR && cd $DIR && $EXE --lattice-size=$L

cd $TOP

done

Now let’s consider how to collate and process the data. Suppose that

each outfile.dat contains a single comment line followed by three

columns of data. We can pick out the “magnetization” as follows.

$ head -n5 runs2/L8/outfile.dat

### sample ##### energy ##### magnetization ###

000000 43.77 697.6

000001 43.39 967.5

000002 45.11 928.2

000003 46.35 928.2

$ awk '{ if (substr($1,1,1) != "#") print $3; }' runs2/L8/outfile.dat

697.6

967.5

928.2

928.2

.

.

(Note that substr is an awk function that picks out a substring

from a string of text.)
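An awk pattern placed in front of the braces offers another way to skip comment lines: the action then runs only for lines that satisfy the pattern. The sketch below builds a small sample file, with values copied from the listing above, in a temporary location so that it can be run anywhere; the variable names tmp, mags, and mean are illustrative.

```shell
#!/bin/bash
# Build a small sample file in a temporary location.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
### sample ##### energy ##### magnetization ###
000000 43.77 697.6
000001 43.39 967.5
000002 45.11 928.2
000003 46.35 928.2
EOF

# The pattern !/^#/ selects every line that does not begin with #.
mags=$(awk '!/^#/ { print $3 }' "$tmp")
echo "$mags"

# The same pattern can guard a running sum; END runs after the last line.
mean=$(awk '!/^#/ { x += $3; n += 1 } END { printf "%.3f\n", x/n }' "$tmp")
echo "$mean"    # prints 880.375

rm -f "$tmp"
```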

Suppose further that we want to summarize the magnetization data in all

the runs2 subdirectories in terms of an average and variance for

each lattice size. The following script does just that. Note that the

calculations have been encapsulated in functions average and

variance.

#!/bin/bash

function average {

# skip comment lines (first character #) and average column 3

awk 'substr($1,1,1) != "#" { x += $3; n += 1 }

END { print x/n }' "$1"

}

function variance {

# $2 is the average; the value printed is the square root of the

# variance, i.e., the standard deviation

awk -v mean="$2" 'substr($1,1,1) != "#" { x += ($3-mean)^2; n += 1 }

END { print sqrt(x/n) }' "$1"

}

rm -f runs2/mag.dat # start from a clean summary file

for L in 8 16 20 24 32 40 48

do

DIR=runs2/L$L

FILE=$DIR/outfile.dat

if [[ -e $FILE ]]

then

AVE=$(average $FILE)

echo $L $AVE $(variance $FILE $AVE) >> runs2/mag.dat

fi

done

One drawback to the script above is that the lattice sizes are hard

coded. If we perform an additional run for --lattice-size=64, then

we have to edit the collection script to include 64 in the for L

loop. Here’s another way to write the script that doesn’t require

advance knowledge of which L values have already been simulated. It

simply ranges over the directories it finds in runs2.

#!/bin/bash

function average {

# skip comment lines (first character #) and average column 3

awk 'substr($1,1,1) != "#" { x += $3; n += 1 }

END { print x/n }' "$1"

}

function variance {

# $2 is the average; the value printed is the square root of the

# variance, i.e., the standard deviation

awk -v mean="$2" 'substr($1,1,1) != "#" { x += ($3-mean)^2; n += 1 }

END { print sqrt(x/n) }' "$1"

}

rm -f /tmp/mag.dat

for FILE in $(ls -d runs2/L*/outfile.dat)

do

STRIP=${FILE%/outfile.dat} #remove '/outfile.dat'

L=${STRIP#runs2/L} #remove 'runs2/L', leaving only the number

AVE=$(average $FILE)

echo $L $AVE $(variance $FILE $AVE) >> /tmp/mag.dat

done

sort -n -k1,1 /tmp/mag.dat > runs2/mag.dat

rm -f /tmp/mag.dat

This script has one subtlety. The text is initially dumped to

a temporary file /tmp/mag.dat which only becomes runs2/mag.dat

after it’s sorted. Why is this necessary? (Hint: there is a difference

between lexical and numerical order.)

$ mkdir L2

$ mkdir L5

$ mkdir L10

$ mkdir L20

$ mkdir L50

$ mkdir L100

$ ls -d L*

L10 L100 L2 L20 L5 L50

$ for x in $(ls -d L*); do echo ${x:1}; done | rs 1

10 100 2 20 5 50

$ ls -d L* | tr L " " | sort -n | rs 1

2 5 10 20 50 100

We should say a few words about how BASH can be used to automate the production of other scripts.

$ cat > plotter

for FILENAME in "$@"

do

echo plot \'$FILENAME\' using 1:2:3 with errorbars

echo pause -1

done

[ctrl-d]

$ chmod +x plotter

$ ./plotter runs1/output-J*.dat > view.gp

$ gnuplot view.gp

It is also possible to perform and visualize simple calculations right from the command line. For instance, the following bash session graphs the trajectory of a particle,

which has been launched from \((\mathsf{x_0, y_0}) = (\mathsf{40,600})\) with initial velocity \((\mathsf{v_{x,0},v_{y,0}}) = (\mathsf{30,30})\).

$ cat > col2ps

#!/bin/bash

echo "%!"

read a b

echo $a $b moveto

while read a b

do

echo $a $b lineto

done

echo 2 setlinewidth

echo stroke

echo showpage

[ctrl-d]

$ chmod +x col2ps

$ jot 131 0.00 13.00 | awk '{ print 40+30*$1, 600+30*$1-0.5*9.8*$1**2 }' - | ./col2ps > freefall.ps

$ gv freefall.ps

$ ps2pdf freefall.ps freefall.pdf

$ magick convert -density 150 freefall.ps -resize 25% -colorspace Gray freefall.png

This is hardly the best way to obtain such a plot, but it’s a cute demonstration of how we can chain together different Unix tools to carry out complex tasks.

Try this on your own

Evaluate the oscillating behaviour \(x(t) = \sin(t) + 0.25\sin(2t)\) over the range \(t \in [0,10]\) with \(t\) sampled in steps of \(\Delta t = 0.1\). Output the values to a text file in two-column format.